Latent Dirichlet Allocation (LDA) and Amazon Review Analysis

by Saikat Mazumder

Topic modelling is a statistical technique for extracting the subjects, or topics, that appear in a document or other piece of text. It is one of the most frequently used text analysis techniques in Natural Language Processing (NLP), where we look for the semantic structure inside a text. It is an unsupervised machine learning technique that clusters the “topics”, that is, the groups of words that best explain a document.

Using NLP for text analysis can make life much easier for people working in a business. NLP is used in many areas of business, for example chatbots that answer customers’ queries, sentiment analysis of customer reviews, and support for the hiring and recruitment process. Topic modelling is one such technique.

There are two main types of topic analysis. One is topic modelling, which is unsupervised and needs no labelled training data. The other is topic classification, which is a supervised learning technique: the classification model has to be trained on labelled examples before it can give accurate results.

Because topic modelling needs no labelled data, it is usually quicker to get started with than a supervised model that has to be trained first, but its results may be somewhat less accurate.

Where can we use it?

Topic modelling has various uses, for example finding patterns of words inside a document or in customer reviews. At uniQin.ai we have used topic modelling to analyse customer reviews for a few products on Amazon (check out the blog on How the Feature Store of uniQin.ai will help other Data Science Projects to get more insight). One of the most widely used topic modelling techniques is Latent Dirichlet Allocation (LDA), which is based on distributional semantics: a way of measuring the semantic similarity between items from how they are distributed across sample text.

Latent Dirichlet Allocation (LDA)

LDA is based on the distributional hypothesis and the statistical mixture hypothesis. It treats each document as a mixture of topics, and each topic as a set of words with certain probabilities. In other words, LDA makes two assumptions: documents are mixtures of topics, and topics are mixtures of (tokenized) words.
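The two assumptions can be made concrete with a toy version of LDA's generative story. The sketch below is for illustration only: it uses the standard library rather than gensim, and the two topics, their word probabilities, and the `alpha` value are made-up assumptions, not values from our review data.

```python
import random


def sample_dirichlet(alphas, rng):
    """Draw one Dirichlet sample by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(topics, alpha, n_words, rng):
    """Generate one toy document following LDA's generative story."""
    # 1. Draw the document's topic mixture (theta) from a Dirichlet prior.
    theta = sample_dirichlet([alpha] * len(topics), rng)
    words = []
    for _ in range(n_words):
        # 2. Pick a topic for this word position according to theta.
        topic_id = rng.choices(range(len(topics)), weights=theta)[0]
        # 3. Pick a word from that topic's word distribution.
        vocab, probs = zip(*topics[topic_id].items())
        words.append(rng.choices(vocab, weights=probs)[0])
    return theta, words


# Hypothetical topics: each maps words to probabilities that sum to 1.
topics = [
    {"battery": 0.5, "charge": 0.3, "life": 0.2},
    {"delivery": 0.6, "package": 0.3, "late": 0.1},
]

rng = random.Random(0)
theta, words = generate_document(topics, alpha=0.5, n_words=8, rng=rng)
print("topic mixture:", theta)
print("document:", words)
```

Fitting an LDA model runs this story in reverse: given only the words, it estimates the topic mixtures and the topic-word distributions that most plausibly produced them.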

More on the algorithm can be read here:

https://en.wikipedia.org/wiki/Latent_Dirichlet_allocation

https://www.jmlr.org/papers/volume3/blei03a/blei03a.pdf

Topic Modelling for Amazon Reviews:

Sellers often list many different products on Amazon or another e-retail platform and need to analyse their customers’ reviews. It is essential for a business to know which topics customers mainly talk about. At uniQin.ai we scraped the customers’ reviews and analysed the topics using LDA. Let’s go through the procedure.

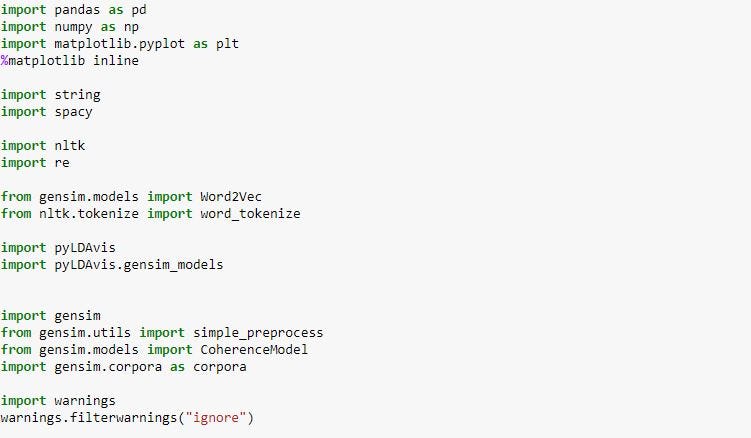

We used gensim and spaCy for preprocessing and model building, and pyLDAvis for plotting.

Below are the imports:

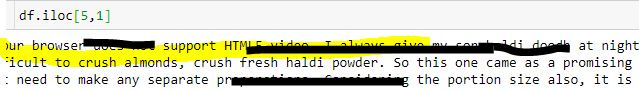

We scrape the reviews and store them in a pandas DataFrame for later use. The reviews are shown below.

(The reviews have been distorted for privacy reasons.)

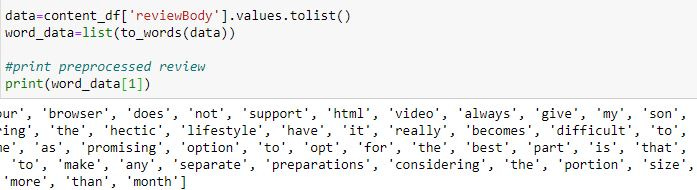

For preprocessing, we first use the gensim library’s simple_preprocess function to split each review into lowercase word tokens. Alternatively, the nltk library’s word_tokenize can be used for the same purpose.

(https://tedboy.github.io/nlps/generated/generated/gensim.utils.simple_preprocess.html)

Below is the review after tokenizing.

Then we remove stopwords, punctuation and special symbols from the text. We used the stopword list from nltk for this.

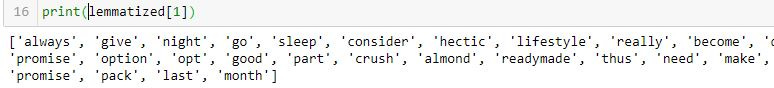
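The filtering step can be sketched as follows. To keep the example runnable without downloading anything, it uses a small hand-written stop list as a stand-in; in practice the list would come from `nltk.corpus.stopwords.words("english")` after running `nltk.download("stopwords")`. The function name and sample tokens are hypothetical.

```python
import string

# Small hand-written stop list, standing in for nltk's English stopwords.
STOP_WORDS = {"the", "is", "and", "was", "a", "it", "of", "to", "in"}


def remove_stopwords(tokens, stop_words=STOP_WORDS):
    """Keep only alphabetic tokens that are not stopwords."""
    cleaned = []
    for token in tokens:
        # Trim surrounding punctuation and normalise case.
        token = token.strip(string.punctuation).lower()
        # Drop empty strings, tokens with symbols or digits, and stopwords.
        if token and token.isalpha() and token not in stop_words:
            cleaned.append(token)
    return cleaned


tokens = ["The", "battery", "life", "is", "great", "!", "and", "it's", "cheap"]
filtered = remove_stopwords(tokens)
print(filtered)
```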

Lemmatization - Stemming and lemmatization are not the same thing. Stemming simply chops common prefixes or suffixes off a word, so the result is not always a real word: for example, the Porter stemmer turns both studies and studying into studi. Lemmatization instead uses a morphological analysis of each word to return its dictionary form (the lemma), so studies and studying both become study.

In our case, we use spacy for lemmatization.

Gensim assigns a unique id to each word in the documents. The resulting corpus, shown below, represents each document as a list of (word_id, word_frequency) pairs.

You can also view the corpus in human-readable form:

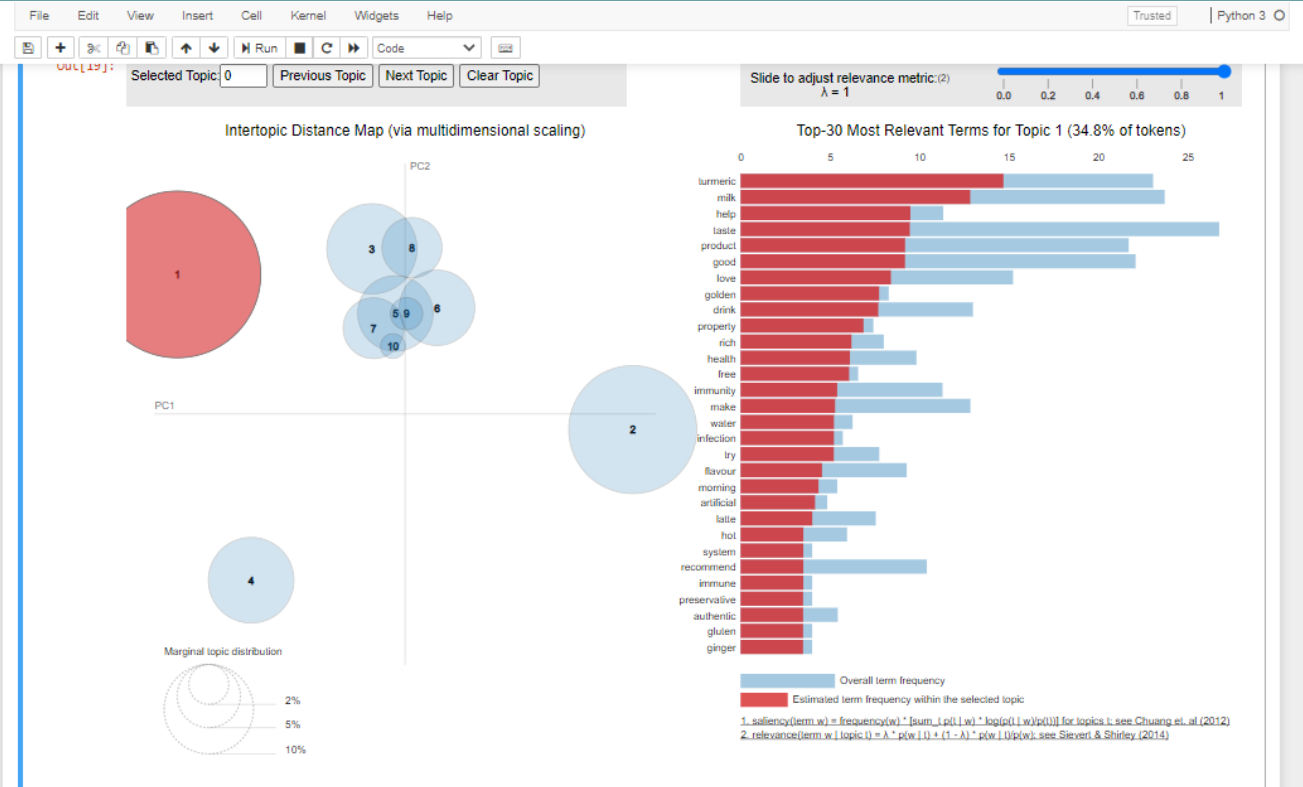

Now we build the topic model, starting with 10 topics. The hyperparameter alpha controls the sparsity of the document-topic (theta) distributions. Similarly, the hyperparameter eta controls the sparsity of the topic-word distributions.

After that, we visualise the topics. The pyLDAvis library has excellent interactive visualization features.

The output of the topic modelling
